Robotics Industry Vision: The Convergent Evolution of Automation, Artificial Intelligence, and Web3

At this stage, however, the model is still in early exploration and far from establishing stable cash flow or a scalable commercial ecosystem. Most projects remain at the narrative level, with limited real-world deployment.

Original Author: Jacob Zhao

This independent research report is supported by IOSG Ventures. Special thanks to Hans (RoboCup Asia-Pacific), Nichanan Kesonpat (1kx), Robert Koschig (1kx), Amanda Young (Collab+Currency), Jonathan Victor (Ansa Research), Lex Sokolin (Generative Ventures), Jay Yu (Pantera Capital), and Jeffrey Hu (Hashkey Capital) for their valuable suggestions. While writing, we also sought opinions and feedback from project teams including OpenMind, BitRobot, peaq, Auki Labs, XMAQUINA, GAIB, Vader, Gradient, Tashi Network, and CodecFlow. This article strives to be objective and accurate. Some views involve subjective judgment, however, and deviations are inevitable. We appreciate readers' understanding.

I. Robot Landscape: From Industrial Automation to Humanoid Intelligence

The traditional robotics industry chain has formed a complete, bottom-up layered system with four major segments: Core Components → Control Systems → Whole-Machine Manufacturing → Application Integration. Each segment plays a distinct role:

- Core components (controllers, servos, reducers, sensors, batteries, etc.) carry the highest technological barriers and set the performance and cost floor of the entire machine.
- The control system is the robot's "brain and cerebellum," responsible for decision-making, planning, and motion control.
- Whole-machine manufacturing reflects supply chain integration capability.
- System integration and application are becoming the new core of value creation.

Robots worldwide, viewed by application scenario and form factor, are evolving along the path "Industrial Automation → Scene Intelligence → General Intelligence." This evolution has produced five major types: Industrial Robots, Mobile Robots, Service Robots, Specialized Robots, and Humanoid Robots.

· Industrial Robots: Currently the only fully mature track, widely used in manufacturing processes such as welding, assembly, painting, and handling. The industry has a standardized supply chain, stable gross margins, and clear ROI. Collaborative robots (cobots) are the fastest-growing subclass; they emphasize human-robot collaboration and easy deployment. Key companies: ABB, Fanuc, Yaskawa, KUKA, Universal Robots, Techman, Aubo.

· Mobile Robots: This category includes AGVs (Automated Guided Vehicles) and AMRs (Autonomous Mobile Robots). They are widely deployed in logistics warehousing, e-commerce fulfillment, and manufacturing transport, and have become the most mature category in the B2B sector. Representative companies: Amazon Robotics, Geek+, Quicktron, Locus Robotics.

· Service Robots: Targeting industries such as cleaning, catering, hotels, and education, this is the fastest-growing segment on the consumer side. Cleaning products now follow consumer-electronics dynamics, while medical and commercial delivery services are accelerating toward commercialization. A class of more general-purpose manipulation robots is also emerging, such as Dyna's dual-arm system. These are more flexible than task-specific products but not yet as universal as humanoid robots. Representative companies: Ecovacs, Stone Technology (Roborock), Pudu Technology, Qihan Technology, iRobot, Dyna, etc.

· Specialized Robots: Mainly serving healthcare, defense, construction, marine, and aerospace, this segment has a limited market size but high profitability and strong barriers to entry. It relies heavily on government and corporate orders and is in a stage of vertical, niche growth. Typical examples include Intuitive Surgical, Boston Dynamics, ANYbotics, and NASA Valkyrie.

· Humanoid Robots: Regarded as the future "general labor platform." Representative companies include Tesla (Optimus), Figure AI (Figure 01), Sanctuary AI (Phoenix), Agility Robotics (Digit), Apptronik (Apollo), 1X Robotics, Neura Robotics, Unitree, UBTECH, AgiBot, etc.

Humanoid robots are currently the most prominent frontier. Their core value is a human-like structure that fits existing social spaces, which is why they are seen as the key form leading to a "general labor platform." Industrial robots pursue maximum efficiency. Humanoid robots instead emphasize general adaptability and task transferability, so they can enter factories, homes, and public spaces without modifying the environment.

Most humanoid robots are still at the technology demonstration stage, mainly validating dynamic balance, walking, and manipulation. Some projects have begun small-scale deployments in highly controlled factory settings (such as Figure × BMW and Agility Digit). More vendors (such as 1X) are expected to begin early distribution from 2026. These remain restricted "narrow-scenario, single-task" applications rather than truly general labor deployments, and scaled commercialization is still several years away.

Key bottlenecks include:

- control challenges such as multi-degree-of-freedom coordination and real-time dynamic balance;
- limited energy efficiency and endurance, constrained by battery energy density and drive efficiency;
- perception-decision chains that are prone to instability and hard to generalize in open environments;
- significant data gaps that make general policy training difficult;
- unresolved cross-embodiment transfer;
- a hardware supply chain and cost curve (especially outside China) that remain real-world barriers, further raising the difficulty of large-scale, low-cost deployment.

The future commercialization path is expected to go through three stages:

- Short term: Demo-as-a-Service, relying on pilots and subsidies.
- Medium term: Robotics-as-a-Service (RaaS), building a task and skill ecosystem.
- Long term: Labor Cloud and intelligent subscription services, shifting the value proposition from hardware manufacturing to software and service networks.

In short, humanoid robots are in a critical transition from demonstration to self-learning. Whether they can overcome the triple barriers of control, cost, and algorithms will determine whether they truly achieve embodied intelligence.

II. AI × Robotics: Dawn of the Embodied Intelligence Era

Traditional automation relies mainly on pre-programming and pipeline-style control (such as the perception-planning-control DSOP architecture), which operates reliably only in structured environments. The real world, however, is more complex and dynamic. The new generation of embodied intelligence follows a different paradigm: through large models and unified representation learning, robots gain cross-scenario "understand-predict-act" capabilities. Embodied intelligence emphasizes the dynamic coupling of body (hardware), brain (model), and environment (interaction). The robot is the vessel, and intelligence is the core.

Generative AI is intelligence of the language world, excelling at understanding symbols and semantics. Embodied intelligence is intelligence of the physical world, mastering perception and action. The two correspond to the "brain" and the "body" and represent two parallel storylines of AI evolution. In terms of intelligence level, embodied intelligence is higher-order than generative AI, but it lags significantly in maturity.

Large Language Models (LLMs) draw on the vast Internet corpus and have a clear "data → compute → deploy" closed loop. Robot intelligence, by contrast, requires first-person, multimodal, action-coupled data: teleoperation trajectories, first-person video, spatial maps, operation sequences, and so on. Such data does not exist naturally. It must be generated through real interaction or high-fidelity simulation, which makes it scarcer and more expensive. Simulated and synthetic data help to some extent, but they cannot replace real sensorimotor experience. This is why companies like Tesla and Figure build their own teleoperation data factories, and why third-party data-labeling factories are emerging in Southeast Asia. In short, LLMs learn from existing data, while robots must "create" data by interacting with the physical world.

Over the next 5–10 years, the two are expected to integrate deeply through Vision-Language-Action models and Embodied Agent architectures. LLMs will handle high-level cognition and planning while robots handle real-world execution. Together they form a bidirectional loop between data and action that pushes AI from "language intelligence" toward truly general intelligence (AGI).

The core technology stack of Embodied Intelligence can be seen as a bottom-up intelligent stack: VLA (Perception Fusion), RL/IL/SSL (Intelligent Learning), Sim2Real (Reality Transfer), World Model (Cognitive Modeling), and Multi-Agent Collaboration and Memory Reasoning (Swarm & Reasoning). Among these, VLA and RL/IL/SSL act as the "engine" of Embodied Intelligence, determining its implementation and commercialization; Sim2Real and World Model are key technologies that bridge virtual training and real-world execution; multi-agent collaboration and memory reasoning represent a higher level of group and metacognitive evolution.

Perceptual Understanding: Vision–Language–Action Models

A VLA model integrates vision, language, and action in a single model, so a robot can understand intent from human language and translate it into concrete operations. Its execution process includes semantic parsing, target recognition (locating target objects in visual input), and path planning with action execution. This closes the loop of "understand semantics → perceive the world → complete tasks," a key breakthrough for embodied intelligence. Prominent projects such as Google RT-X, Meta Ego-Exo, and Figure Helix illustrate cutting-edge directions including cross-modal understanding, immersive perception, and language-driven control.

Figure: General architecture of a Vision-Language-Action model
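The execution flow described above (semantic parsing → target recognition → path planning and action execution) can be illustrated with a deliberately tiny, rule-based sketch. A real VLA model learns all of these stages end to end inside one network. Here each stage is a hand-written stand-in, and every name (`Detection`, `parse_instruction`, `locate_target`, `plan_path`, the `move_to` command strings) is hypothetical, chosen only to make the data flow visible.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical perception output: object label, color, and 2D position (meters)."""
    label: str
    color: str
    x: float
    y: float


def parse_instruction(text: str) -> tuple[str, str]:
    """Semantic parsing stand-in: read (color, object) from 'pick up the <color> <object>'."""
    words = text.lower().rstrip(".").split()
    return words[-2], words[-1]


def locate_target(detections: list[Detection], color: str, label: str) -> Detection | None:
    """Target recognition stand-in: return the first detection matching the parsed intent."""
    for det in detections:
        if det.color == color and det.label == label:
            return det
    return None


def plan_path(start: tuple[float, float], goal: tuple[float, float], steps: int = 4) -> list[tuple[float, float]]:
    """Path planning stand-in: evenly spaced straight-line waypoints, ending at the goal."""
    (x0, y0), (x1, y1) = start, goal
    return [(x0 + (x1 - x0) * i / steps, y0 + (y1 - y0) * i / steps) for i in range(1, steps + 1)]


def execute(instruction: str, detections: list[Detection], gripper=(0.0, 0.0)) -> list[str]:
    """Chain the three stages into a list of symbolic motor commands."""
    color, label = parse_instruction(instruction)
    target = locate_target(detections, color, label)
    if target is None:
        return ["report: target not found"]
    waypoints = plan_path(gripper, (target.x, target.y))
    return [f"move_to({x:.2f}, {y:.2f})" for x, y in waypoints] + ["close_gripper()"]
```

Consider a scene with a blue cup at (1.0, 1.0) and a red cup at (2.0, 0.0). Running `execute("Pick up the red cup", scene)` yields four `move_to` waypoints ending at `move_to(2.00, 0.00)`, followed by `close_gripper()`. The bottlenecks listed next map onto these stages: ambiguous instructions break `parse_instruction`, and misaligned perception corrupts everything downstream of `locate_target`.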

Currently, VLA is still in its early stages, facing four core bottlenecks:

1) Semantic Ambiguity and Weak Task Generalization: Models struggle to understand ambiguous, open-ended instructions;

2) Unstable Visual and Action Alignment: Perception errors are amplified in path planning and execution;

3) Scarce and Non-Standard Multimodal Data: High costs of collection and annotation make it difficult to form a scalable data flywheel;

4) Temporal and Spatial Challenges of Long-Horizon Tasks: Tasks that span a long period expose weak planning and memory capabilities. Tasks that cover a large spatial scope require the model to reason about things "beyond its view." Currently, VLA lacks a stable world model and cross-spatial reasoning ability.

These issues collectively limit VLA's cross-scenario generalization ability and the scalability of its deployment.

Intelligent Learning: Self-Supervised Learning (SSL), Imitation Learning (IL), and Reinforcement Learning (RL)

· Self-Supervised Learning: Automatically extracting semantic features from perceptual data to help robots "understand the world." Essentially, it teaches machines to observe and represent.

· Imitation Learning: Rapidly acquiring basic skills by imitating human demonstrations or expert examples. Essentially, it teaches machines to perform tasks like humans.

· Reinforcement Learning: Through a "reward-penalty" mechanism, robots optimize their action policies via continuous trial and error. Essentially, it teaches machines to improve through experience.

In embodied AI, the three methods play complementary roles:

- Self-supervised learning (SSL) lets robots predict state changes and physical laws from perceptual data, and so understand the causal structure of the world.
- Reinforcement learning (RL) is the core engine of intelligence. Through interaction with the environment and reward-based trial-and-error optimization, it drives robots to master complex behaviors such as walking, grasping, and obstacle avoidance.
- Imitation learning (IL) accelerates this process with human demonstrations, quickly giving robots action priors.

The current mainstream direction combines the three into a hierarchical learning framework. SSL provides foundational representations, IL imparts human priors, and RL drives policy optimization, balancing efficiency and stability. Together they form the core mechanism that carries embodied intelligence from understanding to action.
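The IL-then-RL part of this hierarchy can be sketched on a toy problem: a five-cell corridor where the robot must reach the rightmost cell. A behavior-cloning stand-in turns a few demonstrations into a small initial bonus in a tabular Q-function, and Q-learning then refines those values through trial and error. This is a minimal sketch under stated assumptions. The environment, reward, and hyperparameters are all hypothetical, the SSL representation stage is omitted, and real systems use neural policies rather than tables.

```python
from __future__ import annotations

import random

N_STATES, GOAL = 5, 4           # corridor cells 0..4; reaching cell 4 ends the episode
ACTIONS = ("left", "right")


def step(state: int, action: str) -> tuple[int, float, bool]:
    """Toy environment: move one cell (walls at both ends), reward 1.0 only on reaching the goal."""
    nxt = max(0, state - 1) if action == "left" else min(GOAL, state + 1)
    return nxt, (1.0 if nxt == GOAL else 0.0), nxt == GOAL


def imitation_prior(demos: list[tuple[int, str]], bonus: float = 0.1) -> dict:
    """IL stage (behavior-cloning stand-in): demonstrated (state, action) pairs start with a bonus."""
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for s, a in demos:
        q[(s, a)] += bonus
    return q


def q_learning(q: dict, episodes: int = 200, alpha: float = 0.5,
               gamma: float = 0.9, epsilon: float = 0.1, seed: int = 0) -> dict:
    """RL stage: epsilon-greedy tabular Q-learning that starts from the imitation prior."""
    rng = random.Random(seed)
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)                          # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])    # exploit current estimate
            nxt, reward, done = step(state, action)
            target = reward if done else reward + gamma * max(q[(nxt, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (target - q[(state, action)])
            state = nxt
    return q


def greedy_policy(q: dict) -> list[str]:
    """Best action in every non-terminal cell."""
    return [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)]
```

With demonstrations `[(s, "right") for s in range(4)]`, the greedy policy is already "always move right" before any RL. The RL stage then replaces the arbitrary 0.1 bonus with calibrated values, and Q(3, "right") approaches 1.0. In practice this matters most when demonstrations are imperfect, because RL can overturn a bad prior that IL alone would keep.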

Reality Transfer: Sim2Real—Crossing from Simulation to Reality

Sim2Real (Simulation to Reality) lets robots train in a virtual environment and then transfer to the real world. It uses high-fidelity simulators (such as NVIDIA Isaac Sim & Omniverse and DeepMind MuJoCo) to generate large-scale interaction data, significantly reducing training costs and hardware wear. The central problem is bridging the "sim-to-real gap," and key methods include:

· Domain Randomization: Randomly adjusting parameters such as lighting, friction, noise, etc., in simulation to improve model generalization;

· Physics Consistency Calibration: Using real sensor data to calibrate the simulation engine, enhancing physical realism;

· Adaptive Fine-tuning: Conducting rapid retraining in a real environment to achieve stable transfer.
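Domain randomization, the first method above, can be sketched in a few lines. Each training episode draws its own physics and rendering parameters, so the policy never overfits one exact simulator configuration. The parameter names and ranges below are hypothetical placeholders; real projects tune them per robot and task and apply them through their simulator's own APIs, which this stdlib-only sketch does not call.

```python
from __future__ import annotations

import random
from dataclasses import dataclass

# Hypothetical randomization ranges (low, high) for each simulator parameter.
RANGES = {
    "friction": (0.5, 1.2),
    "light_intensity": (0.3, 1.0),
    "sensor_noise_std": (0.0, 0.02),
    "payload_kg": (0.0, 1.5),
}


@dataclass
class SimParams:
    friction: float
    light_intensity: float
    sensor_noise_std: float
    payload_kg: float


def sample_params(rng: random.Random) -> SimParams:
    """Draw one randomized configuration, uniformly within each parameter's range."""
    return SimParams(**{name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()})


def noisy_reading(true_value: float, params: SimParams, rng: random.Random) -> float:
    """Corrupt a simulated sensor reading with this episode's Gaussian noise level."""
    return true_value + rng.gauss(0.0, params.sensor_noise_std)


def collect_randomized_episodes(n_episodes: int, seed: int = 0) -> list[SimParams]:
    """Sample one configuration per episode (seeded, so runs are reproducible)."""
    rng = random.Random(seed)
    episodes = []
    for _ in range(n_episodes):
        params = sample_params(rng)
        # In a real pipeline the simulator would be reset with `params` here and the
        # policy rolled out; this sketch only records the sampled configurations.
        episodes.append(params)
    return episodes
```

The choice of ranges is the main design decision. Ranges that are too narrow leave the reality gap in place. Ranges that are too wide make the task needlessly hard, which is why physics-consistency calibration and real-world fine-tuning are typically used alongside randomization.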

Sim2Real is a central link in deploying embodied intelligence. It lets AI models learn the "perception-decision-control" loop in a safe, low-cost virtual world. Simulation training itself has matured (e.g., NVIDIA Isaac Sim, MuJoCo). Real-world transfer, however, is still constrained by the reality gap, high compute and annotation costs, and insufficient generalization and safety in open environments. Nonetheless, Simulation-as-a-Service (SimaaS) is becoming the lightest yet most strategically valuable infrastructure of the embodied intelligence era. Its business models include Platform as a Service (PaaS), Data as a Service (DaaS), and Validation as a Service (VaaS).

Cognitive Modeling: World Model - Robot's "Inner World"

World Model is the "inner brain" of embodied intelligence, allowing robots to internally simulate the environment and action consequences for prediction and inference. By learning the dynamic rules of the environment, it builds a predictable internal representation, enabling the agent to "pre-play" results before execution, evolving from a passive executor to an active reasoner. Representative projects include DeepMind Dreamer, Google Gemini + RT-2, Tesla FSD V12, NVIDIA WorldSim, etc. Typical technical paths include:

· Latent Dynamics Modeling: Compressing high-dimensional perception into a latent state space;

· Imagination-based Planning: Virtual trial-and-error and path prediction inside the model;

· Model-Based Reinforcement Learning (Model-based RL): Using a world model in place of the real environment to reduce training costs.
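The three paths above can be combined in a toy example. First, a dynamics model is fitted from logged transitions. Then candidate action sequences are "imagined" inside that model, and only the first action of the best sequence is executed before replanning (receding-horizon planning). Everything here is a hypothetical illustration: a one-dimensional point whose true dynamics are `x' = x + 0.5·a`, a one-parameter model fitted by least squares, and brute-force search over a three-step horizon. Real world models learn high-dimensional latent dynamics with neural networks.

```python
from __future__ import annotations

import itertools


def true_dynamics(x: float, a: float) -> float:
    """The 'real world' (unknown to the agent): the point moves 0.5 units per unit of action."""
    return x + 0.5 * a


def fit_dynamics(transitions: list[tuple[float, float, float]]) -> float:
    """Learn the gain k in x' = x + k*a by least squares over (x, a, x') tuples."""
    num = sum(a * (x_next - x) for x, a, x_next in transitions)
    den = sum(a * a for _, a, _ in transitions)
    return num / den


def imagine(x: float, plan: tuple[float, ...], k: float) -> list[float]:
    """Roll a candidate action sequence forward inside the learned model only."""
    states = []
    for a in plan:
        x = x + k * a
        states.append(x)
    return states


def plan_first_action(x: float, goal: float, k: float, horizon: int = 3,
                      choices: tuple[float, ...] = (-1.0, 0.0, 1.0)) -> float:
    """Score every action sequence by imagined distance-to-goal; return its first action."""
    def cost(plan: tuple[float, ...]) -> float:
        return sum(abs(s - goal) for s in imagine(x, plan, k))
    best = min(itertools.product(choices, repeat=horizon), key=cost)
    return best[0]
```

Suppose transitions are collected from `true_dynamics` at a few states with actions -1.0, 0.5, and 1.0. `fit_dynamics` then recovers k = 0.5. Starting from x = 0.0 with goal 1.0, the planner's first action is 1.0, and after replanning at every step the point settles at the goal. The instability of long-horizon prediction mentioned below shows up here as well: any error in k compounds with every imagined step.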

The World Model is at the theoretical forefront of embodied intelligence, representing the core path for robots to transition from "reactive" to "predictive" intelligence. However, it is still constrained by challenges such as modeling complexity, instability in long-term predictions, and the lack of a unified standard.

Collective Intelligence and Memory & Reasoning: From Individual Action to Collaborative Cognition

Multi-agent collaboration (Multi-Agent Systems) and memory and reasoning (Memory & Reasoning) are two important directions in the evolution of embodied intelligence. They mark the move from "individual intelligence" toward "collective intelligence" and "cognitive intelligence." Together, they support collaborative learning and long-term adaptability in intelligent systems.

Multi-Agent Collaboration (Swarm / Cooperative RL):

This refers to multiple agents achieving collaborative decision-making and task allocation in a shared environment through distributed or cooperative reinforcement learning. The direction has a solid research foundation. OpenAI's Hide-and-Seek experiment demonstrated spontaneous cooperation and strategy emergence among multiple agents. Algorithms such as QMIX and MADDPG provide collaborative frameworks based on centralized training with decentralized execution (CTDE). Such methods have been applied and validated in scenarios such as warehouse robot scheduling, inspection, and swarm control.
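The CTDE idea behind QMIX can be shown in a few lines. Each agent keeps a local utility for its own actions. During training, a centralized mixer combines those utilities with non-negative weights into a joint value. At execution time, each robot simply takes its own argmax with no communication. Because the mixer is monotonic in every agent's utility, the decentralized choice coincides with the centralized joint argmax. This is a deliberately simplified sketch. Real QMIX produces state-dependent mixing weights from a hypernetwork and learns the utilities. Here the weights are fixed constants, and the two "warehouse robots" and their utility numbers are hypothetical.

```python
from __future__ import annotations

import itertools

# Hypothetical local utilities Q_i(a_i) for two warehouse robots choosing where to go next.
LOCAL_Q = {
    "robot_a": {"aisle_1": 0.2, "aisle_2": 0.9, "charge": 0.1},
    "robot_b": {"aisle_1": 0.7, "aisle_2": 0.4, "charge": 0.3},
}


def mix(utilities: dict[str, float], weights: dict[str, float], bias: float = 0.0) -> float:
    """Monotonic mixer: Q_tot = sum_i |w_i| * Q_i + b, so Q_tot never decreases when any Q_i rises."""
    return sum(abs(weights[agent]) * q for agent, q in utilities.items()) + bias


def decentralized_actions() -> dict[str, str]:
    """Execution: every agent greedily maximizes its own utility, without communicating."""
    return {agent: max(q, key=q.get) for agent, q in LOCAL_Q.items()}


def centralized_argmax(weights: dict[str, float]) -> dict[str, str]:
    """Training-time check: brute-force the joint action that maximizes the mixed value."""
    agents = list(LOCAL_Q)
    best = max(
        itertools.product(*(LOCAL_Q[a] for a in agents)),
        key=lambda joint: mix({ag: LOCAL_Q[ag][act] for ag, act in zip(agents, joint)}, weights),
    )
    return dict(zip(agents, best))
```

Taking the absolute value is what enforces monotonicity: even a raw weight of -0.5 becomes 0.5, so the decentralized and centralized answers agree. The flip side is a known limitation of this family of methods. Coordination patterns in which one robot's best action depends on another's choice cannot be represented by a monotonic mixer.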

Memory & Reasoning:

Focuses on enabling intelligent agents to have long-term memory, situational understanding, and causal reasoning abilities, which are key directions for achieving cross-task transfer and self-planning. Typical research includes DeepMind's Gato (a multi-task embodied agent integrating perception, language, and control) and the DeepMind Dreamer series (based on world model imaginative planning), as well as open-ended embodied agents like Voyager, which achieve continuous learning through external memory and self-evolution. These systems lay the foundation for robots to have the ability to "remember the past and infer the future."
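External memory of the kind Voyager uses can be sketched as a skill library. Each learned skill is stored with a natural-language description, and the most relevant skills are retrieved when a new task arrives. Voyager itself retrieves skills through embeddings of LLM-generated descriptions. This sketch swaps in bag-of-words cosine similarity so it runs with the standard library only. `SkillLibrary` and the example skills are hypothetical.

```python
from __future__ import annotations

import math
from collections import Counter


def _vectorize(text: str) -> Counter:
    """Bag-of-words term counts (a crude stand-in for a learned text embedding)."""
    return Counter(text.lower().split())


def _cosine(u: Counter, v: Counter) -> float:
    dot = sum(u[w] * v[w] for w in u)
    norm = math.sqrt(sum(c * c for c in u.values())) * math.sqrt(sum(c * c for c in v.values()))
    return dot / norm if norm else 0.0


class SkillLibrary:
    """Hypothetical long-term memory: maps skill names to (description, program) pairs."""

    def __init__(self) -> None:
        self._skills: dict[str, tuple[str, str]] = {}

    def add(self, name: str, description: str, program: str) -> None:
        """Store (or overwrite) a skill after it has been verified to work."""
        self._skills[name] = (description, program)

    def retrieve(self, task: str, k: int = 2) -> list[str]:
        """Return the names of the k skills whose descriptions best match the task text."""
        query = _vectorize(task)
        ranked = sorted(
            self._skills,
            key=lambda name: _cosine(query, _vectorize(self._skills[name][0])),
            reverse=True,
        )
        return ranked[:k]
```

After storing "open_drawer", "pick_cup", and "wipe_table" skills, the query "open the top drawer" retrieves "open_drawer" first. The retrieved programs can then be reused or composed, which is the "remember the past" half of the capability described above.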

Global Embodied Intelligence Industry Landscape: Co-opetition

The global robotics industry is in a phase that is "cooperation-led, with deepening competition." China's supply chain efficiency, the U.S.'s AI capabilities, Japan's component precision, and Europe's industrial standards together shape the industry's long-term landscape.

· The United States leads in frontier AI models and software (DeepMind, OpenAI, NVIDIA). This advantage has not extended to robot hardware, where Chinese manufacturers are ahead in iteration speed and real-world performance. The United States is promoting industrial reshoring through the CHIPS Act and the Inflation Reduction Act (IRA).

· China has built a leading edge in components, automated factories, and humanoid robots through scale manufacturing, vertical integration, and policy support. Chinese companies excel in hardware and supply chain capabilities, and some, such as UBTech, have achieved mass production and are extending toward intelligent decision-making. A significant gap with the United States remains, however, in algorithms and simulation training.

· Japan has long dominated high-precision components and motion control technology. Its industrial system is robust, but AI model integration is still at an early stage, and the pace of innovation is relatively steady.

· South Korea distinguishes itself in the popularization of consumer-grade robots—led by companies like LG, NAVER Labs—and has a mature and robust service robot ecosystem.

· Europe has a well-developed engineering system and safety standards. Companies like 1X Robotics remain active in the research and development layer, but some manufacturing processes have been relocated offshore, and the innovation focus tends towards collaboration and standardization.

III. Robotics × AI × Web3: Narrative Vision and Realistic Path

In 2025, a new narrative emerged in the Web3 industry around the integration of robotics and AI. Web3 is seen as the underlying protocol for a decentralized machine economy, but the value and feasibility of the combination vary significantly across layers:

· Hardware Manufacturing and Service Layer: Capital-intensive with weak data loops, Web3 currently only plays a supporting role in peripheral areas such as supply chain finance or equipment leasing;

· Simulation and Software Ecosystem Layer: There is a high degree of alignment, as simulation data and training tasks can be immutably recorded on the blockchain, and intelligent entities and skill modules can be tokenized through NFTs or Agent Tokens;

· Platform Layer: Decentralized labor and collaboration networks are showing the greatest potential—Web3 can gradually build a trustworthy "machine labor market" through integrated mechanisms for identity, incentives, and governance, laying the institutional groundwork for the future machine economy.

In the long run, the Collaboration and Platform Layer is the most valuable direction for Web3 in the integration of robotics and AI. As robots acquire perception, language, and learning abilities, they are evolving into intelligent entities capable of autonomous decision-making, collaboration, and economic value creation. To truly participate in the economic system, these "intelligent workers" still need to overcome four core thresholds: Identity, Trust, Incentive, and Governance.

· At the Identity layer, machines need to have a verifiable and traceable digital identity. Through Machine DID, each robot, sensor, or drone can generate a unique verifiable "identity card" on the chain, linking its ownership, behavior records, and permission scope to enable secure interaction and responsibility assignment.

· At the Trust layer, the key is to make "robotic labor" verifiable, measurable, and quantifiable. Smart contracts, oracles, and auditing mechanisms can be combined with Proof of Physical Work (PoPW), Trusted Execution Environments (TEE), and Zero-Knowledge Proofs (ZKP). Together these ensure the authenticity and traceability of task execution, giving machine behavior economic accounting value.

· At the Incentive layer, Web3 enables automatic settlement and value transfer between machines through a Token incentive system, account abstraction, and state channels. Robots can complete tasks such as renting out computing power and sharing data through micropayments, and ensure task fulfillment through staking and penalty mechanisms. With smart contracts and oracles, a decentralized "machine collaboration market" can be established without the need for manual scheduling.

· At the Governance layer, once machines have long-term autonomous capabilities, Web3 provides a transparent and programmable governance framework: using DAO governance to collectively decide on system parameters and using multi-signature and reputation mechanisms to maintain security and order. In the long run, this will drive the machine society towards the "algorithmic governance" stage—where humans set goals and boundaries, and machines maintain incentives and balances through contracts.
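The identity, trust, and incentive layers can be tied together in a toy, off-chain simulation. A robot registers an identifier derived from its key and stakes collateral. It is paid from escrow only if the attestation for its task checks out; otherwise part of its stake is slashed. Everything here is hypothetical: `TaskMarket`, the token amounts, and the `did:example:` prefix, which the W3C DID specification reserves for examples. An HMAC with an oracle-held secret stands in for the public-key signatures, oracle reports, TEE quotes, or ZK proofs a real system would verify on-chain. Governance is left out.

```python
from __future__ import annotations

import hashlib
import hmac
from dataclasses import dataclass, field


def make_did(robot_key: bytes) -> str:
    """Identity layer (illustrative): derive a stable identifier from the robot's key material."""
    return "did:example:" + hashlib.sha256(robot_key).hexdigest()[:16]


@dataclass
class TaskMarket:
    """Toy escrow: stakes, rewards, and balances in one hypothetical token unit."""
    oracle_secret: bytes
    min_stake: int = 100
    stakes: dict[str, int] = field(default_factory=dict)
    balances: dict[str, int] = field(default_factory=dict)

    def register(self, did: str, stake: int) -> None:
        """Incentive layer: a robot must lock collateral before it can take tasks."""
        if stake < self.min_stake:
            raise ValueError("stake below minimum")
        self.stakes[did] = stake
        self.balances.setdefault(did, 0)

    def attest(self, did: str, task_id: str, result: str) -> str:
        """Trust-layer stand-in for an oracle that has checked the work: MAC over (robot, task, result)."""
        msg = f"{did}|{task_id}|{result}".encode()
        return hmac.new(self.oracle_secret, msg, hashlib.sha256).hexdigest()

    def settle(self, did: str, task_id: str, result: str, proof: str, reward: int) -> bool:
        """Pay the reward if the proof matches this exact claim; otherwise slash half the stake."""
        expected = self.attest(did, task_id, result)
        if hmac.compare_digest(expected, proof):
            self.balances[did] += reward
            return True
        self.stakes[did] //= 2
        return False
```

In use, a robot with a 150-unit stake presents a valid attestation for task "deliver-42" and is credited its 20-unit reward. If it reuses that proof to claim a different task, the check fails and its stake drops to 75. The constant-time `hmac.compare_digest` avoids leaking how much of a forged proof was correct. That detail matters in real verifiers, even in a toy like this.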

The ultimate vision of Web3-robotics integration has two parts. The first is a Real-World Evaluation Network: a "real-world inference engine" in which distributed robots continuously test and benchmark model capabilities across diverse and complex physical environments. The second is a Robot Labor Market, in which robots worldwide perform verifiable real-world tasks, earn income through on-chain settlement, and reinvest that value in computing power or hardware upgrades.

In practice, the convergence of embodied intelligence and Web3 is still in early exploration, and decentralized machine-intelligence economies are driven more by narrative and community than by deployment. The integration directions with tangible potential lie mainly in the following three areas:

(1) Data Crowdsourcing and Empowerment — Web3 incentivizes contributors to upload real-world data through on-chain rewards and traceability mechanisms;

(2) Global Long Tail Participation — Cross-border micropayments and micro-incentive mechanisms effectively reduce data collection and distribution costs;

(3) Financialization and Collaborative Innovation — The DAO model can drive robot assetization, revenue securitization, and inter-robot settlement mechanisms.

Overall, the short term mainly focuses on data collection and incentive layers; in the mid-term, breakthroughs are expected in "stablecoin payments + long-tail data aggregation" and RaaS assetization and settlement layers; in the long term, if humanoid robots achieve scale, Web3 may become the institutional foundation for machine ownership, income distribution, and governance, driving the formation of a truly decentralized machine economy.

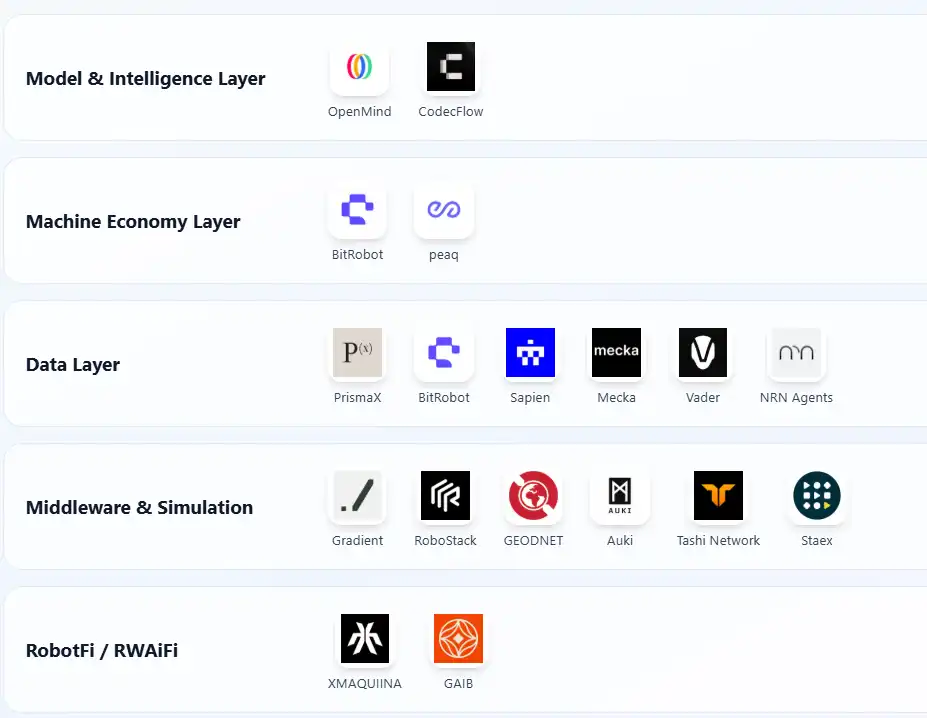

IV. Web3 Robot Ecosystem Map and Featured Cases

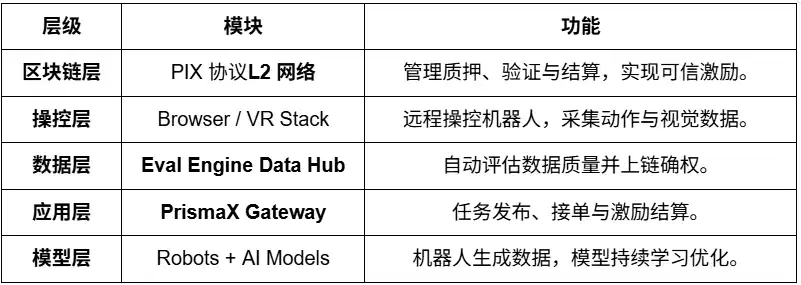

Representative Web3 × Robotics projects were selected on three criteria: verifiable progress, technical openness, and industry relevance. They are categorized according to a five-layer architecture: Model & Intelligence Layer, Machine Economy Layer, Data Collection Layer, Perception and Simulation Infrastructure Layer, and Robot Asset Revenue Layer. To maintain objectivity, we have excluded projects that are clearly "riding the hype" or lack sufficient information. Corrections for any omissions are welcome.

Model & Intelligence Layer

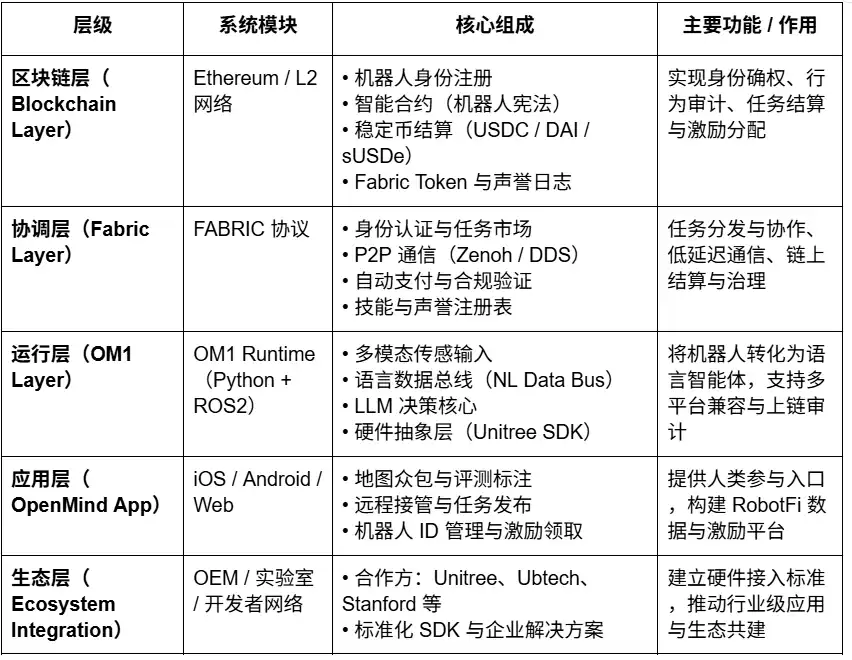

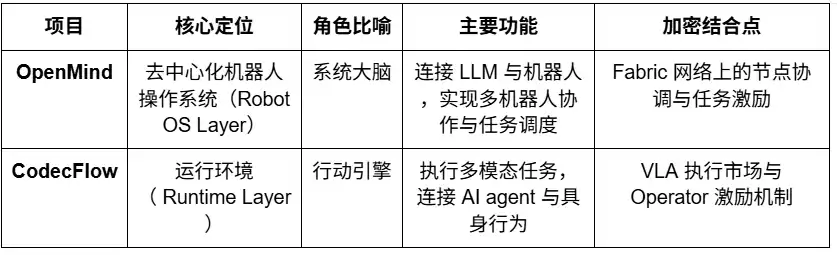

OpenMind - Building Android for Robots

OpenMind is an open-source operating system (Robot OS) for embodied intelligence and robot control. It aims to build the world's first decentralized robot operating environment and development platform. The project's core consists of two components:

· OM1: a modular open-source AI agent runtime (AI Runtime Layer) built on ROS2, used to orchestrate perception, planning, and action pipelines serving digital and physical robots;

· FABRIC: Fabric Coordination Layer, a distributed coordination layer connecting cloud computing power, models, and physical robots, enabling developers to control and train robots in a unified environment.

The core of OpenMind lies in serving as an intelligent intermediary layer between LLM (Large Language Models) and the robot world, enabling language intelligence to truly transform into Embodied Intelligence, establishing an intelligent framework from understanding (Language → Action) to alignment (Blockchain → Rules). The multi-layered OpenMind system achieves a complete collaborative loop: humans provide feedback and annotations through the OpenMind App (RLHF data), the Fabric Network handles identity verification, task assignment, and settlement coordination, OM1 Robots execute tasks and adhere to the "Robot Constitution" on the blockchain to complete behavior audits and payments, thereby realizing a decentralized human feedback → task collaboration → on-chain settlement machine collaboration network.

Project Progress and Realistic Assessment

OpenMind is at the early stage of "technically feasible, not yet commercialized." The core system, OM1 Runtime, has been open-sourced on GitHub, runs on multiple platforms, and supports multimodal input. Through a Natural Language Data Bus (NLDB), it maps language to actions for task understanding. The design is highly original but still experimental. The Fabric network and on-chain settlement have only completed interface-level design.
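The natural-language data bus concept can be illustrated with a small sketch. Each sensor module publishes a short English sentence, and the bus keeps only the freshest sentence per source. A fuser then drops stale observations and assembles the rest into a prompt for the language model that decides the next action. This is a hypothetical illustration of the concept only. `NaturalLanguageBus`, its methods, and the prompt layout are not OM1's actual API, and the LLM call itself is omitted.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class NaturalLanguageBus:
    """Hypothetical bus: modules publish plain-English observations; the newest per source wins."""
    max_age_s: float = 2.0
    latest: dict[str, tuple[float, str]] = field(default_factory=dict, init=False)

    def publish(self, source: str, timestamp: float, sentence: str) -> None:
        """Record a module's latest observation, replacing its previous one."""
        self.latest[source] = (timestamp, sentence)

    def fuse(self, now: float, instruction: str) -> str:
        """Build an LLM prompt from fresh observations only, ordered by source name."""
        lines = [
            f"[{src}] {text}"
            for src, (ts, text) in sorted(self.latest.items())
            if now - ts <= self.max_age_s
        ]
        return "Observations:\n" + "\n".join(lines) + f"\nInstruction: {instruction}\nNext action:"
```

Using plain language as the interchange format lets heterogeneous sensors share one interface that an LLM can read directly. The cost is lost precision: numeric detail survives only as far as each module chooses to verbalize it, which is one reason such designs remain experimental.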

On the ecosystem side, the project has collaborated with open hardware platforms such as Unitree, Ubtech, and TurtleBot, and with institutions such as Stanford, Oxford, and Seoul Robotics. These collaborations serve mainly education and research validation, and there is no industrial deployment yet. The app has launched a test version, but its incentive and task functions are still at an early stage.

On the business side, OpenMind has built a three-tier ecosystem: OM1 (open-source system), Fabric (settlement protocol), and Skill Marketplace (incentive layer). It currently has no revenue and relies on around $20 million in early-stage funding (Pantera, Coinbase Ventures, DCG). Overall, the technology is advanced, but commercialization and the ecosystem are still early. If Fabric is successfully deployed, OpenMind could become the "Android of the embodied intelligence era." The path is long and high-risk, however, and strongly dependent on hardware.

CodecFlow - The Execution Engine for Robotics

CodecFlow is a decentralized execution layer protocol (Fabric) based on the Solana network, designed to provide an on-demand runtime environment for AI agents and robotic systems, enabling each intelligent agent to have an "Instant Machine." The core of the project consists of three main modules:

· Fabric: Cross-cloud computing power aggregation layer (Weaver + Shuttle + Gauge), capable of generating secure virtual machines, GPU containers, or robot control nodes for AI tasks within seconds;

· optr SDK: Agent execution framework (Python interface) for creating an "Operator" that can operate on a desktop, in simulation, or with real robots;

· Token Incentives: On-chain incentives and payment layer that connects compute providers, agent developers, and automation task users, forming a decentralized compute and task marketplace.

The core goal of CodecFlow is to build a "decentralized execution base for AI and robotic operators." Any agent should be able to run securely in any environment (Windows / Linux / ROS / MuJoCo / robot controller). This yields a universal execution architecture from Compute Scheduling (Fabric) → System Layer → Perception and Action (VLA Operator).

Project Progress and Realistic Assessment

An early version of the Fabric framework (Go) and optr SDK (Python) has been released, enabling the launch of isolated compute instances in a web or command-line environment. The Operator Marketplace is expected to launch by the end of 2025, positioned as a decentralized execution layer for AI compute, primarily serving AI developers, robotic research teams, and automation companies.

Machine Economy Layer

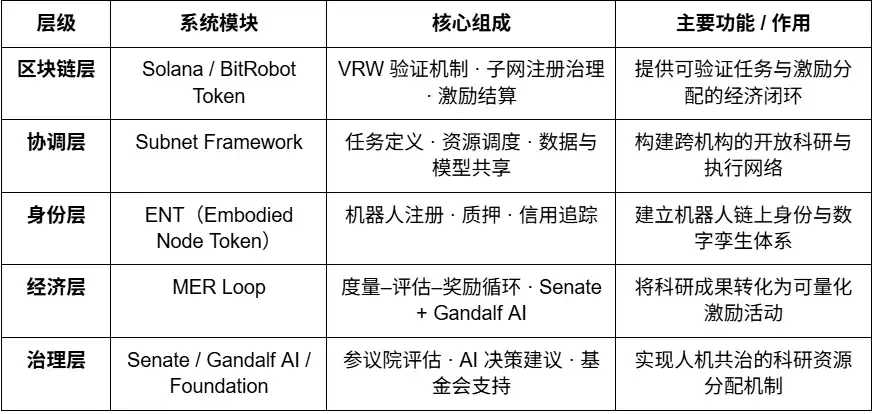

BitRobot - The World's Open Robotics Lab

BitRobot is a decentralized research and collaboration network for embodied AI and robotics development, co-founded by FrodoBots Labs and Protocol Labs. Its core vision is an open architecture built on "Subnets + Incentives + Verifiable Robotic Work (VRW)." Its key mechanisms are to:

· Define and verify the real contribution of each robotic task through the VRW (Verifiable Robotic Work) standard;

· Provide robots with on-chain identity and economic responsibility through ENT (Embodied Node Token);

· Organize cross-border collaboration of research, computing power, devices, and operators through Subnets;

· Achieve "human-robot governance" through Senate + Gandalf AI for incentive-based decision-making and research governance.

Since releasing its white paper in 2025, BitRobot has launched multiple subnets, such as SN/01 ET Fugi and SN/05 SeeSaw by Virtuals Protocol. These enable decentralized teleoperation and real-world data collection. BitRobot has also introduced a $5M Grand Challenges Fund that uses research competitions to drive global model development.

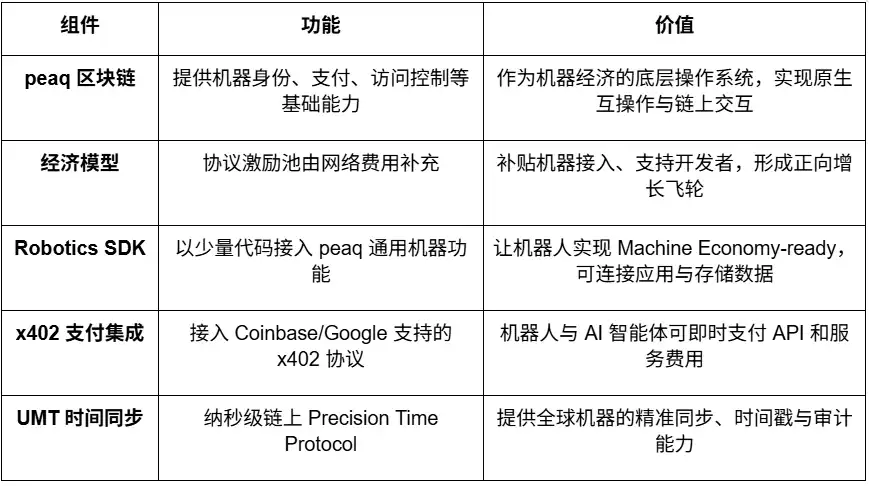

peaq – The Economy of Things

peaq is a Layer-1 blockchain designed for the machine economy, providing fundamental capabilities such as machine identity, on-chain wallets, access control, and nanosecond-level time synchronization (Universal Machine Time) for millions of robots and devices. Its Robotics SDK allows developers to make robots "economy-ready" with minimal code, enabling cross-manufacturer, cross-system interoperability and interaction.

peaq has launched the world's first tokenized robot farm and currently supports over 60 real-world robotic applications. Its tokenization framework helps robotics companies raise funds for capital-intensive hardware and broadens participation beyond traditional B2B/B2C to the wider community. A protocol-level incentive pool funded by network fees lets peaq subsidize new device onboarding and support developers. The result is an economic flywheel that accelerates the expansion of robotics and physical AI projects.

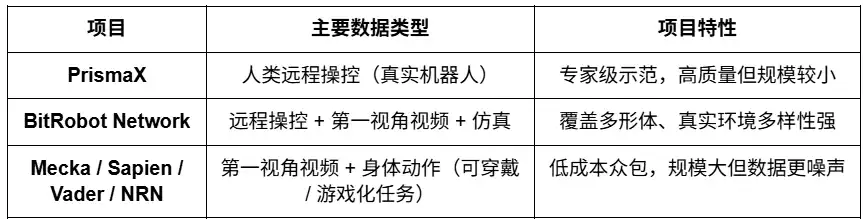

Data Layer

This layer addresses the scarcity and high cost of high-quality real-world data for embodied intelligence training. It collects and generates human-robot interaction data through several pathways:

- teleoperation (PrismaX, BitRobot Network);
- first-person video and motion capture (Mecka, BitRobot Network, Sapien, Vader, NRN);
- simulation and synthetic data (BitRobot Network).

Together these provide a scalable and generalizable training foundation for robotic models.

It is important to note that Web3 is not good at "data production": in hardware, algorithms, and collection efficiency, Web2 giants far surpass any DePIN project. Its real value lies in reshaping how data is distributed and how contributors are incentivized. Built on a "stablecoin payment network + crowdsourcing" model, a permissionless incentive system with on-chain provenance enables low-cost micropayments, traceable contributions, and automatic revenue sharing. However, open crowdsourcing still struggles to close the quality and demand loops: data quality is uneven, effective validation is lacking, and stable demand has yet to materialize.
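"Contribution traceability" and "automatic revenue sharing" can be made concrete with a hash-chained ledger. This is a minimal in-memory sketch of the idea under stated assumptions. The class `ContributionLedger` and its fields are hypothetical and do not correspond to any particular project's on-chain format. Each entry commits to the previous one, so editing a recorded contribution is detectable, and a buyer's payment is split in proportion to the recorded units.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64
FIELDS = ("contributor", "data_hash", "units", "prev")

def _digest(body: dict) -> str:
    # Canonical JSON so the same entry always hashes to the same value.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

@dataclass
class ContributionLedger:
    """Append-only, hash-chained record of data contributions (hypothetical design)."""
    entries: list[dict] = field(default_factory=list)

    def record(self, contributor: str, data_hash: str, units: int) -> str:
        prev = self.entries[-1]["entry_hash"] if self.entries else GENESIS
        body = {"contributor": contributor, "data_hash": data_hash, "units": units, "prev": prev}
        entry_hash = _digest(body)
        self.entries.append({**body, "entry_hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute the chain; editing any recorded field makes verification fail."""
        prev = GENESIS
        for e in self.entries:
            body = {k: e[k] for k in FIELDS}
            if e["prev"] != prev or _digest(body) != e["entry_hash"]:
                return False
            prev = e["entry_hash"]
        return True

    def settle(self, payment_cents: int) -> dict[str, int]:
        """Split a buyer's payment (integer cents) pro rata by recorded units.
        Floor division may leave a few cents unallocated."""
        totals: dict[str, int] = {}
        for e in self.entries:
            totals[e["contributor"]] = totals.get(e["contributor"], 0) + e["units"]
        all_units = sum(totals.values())
        if all_units == 0:
            return {}
        return {c: payment_cents * u // all_units for c, u in totals.items()}
```

On a real chain, the hash linking and settlement would be enforced by the protocol rather than by a Python object. The sketch only shows why provenance makes automatic payouts auditable.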

PrismaX

PrismaX is a decentralized remote control and data economy network for embodied AI, aiming to build a "global robot labor market" where human operators, robot devices, and AI models evolve collaboratively through an on-chain incentive system. The project's core includes two main components:

· Teleoperation Stack—a remote control system (browser/VR interface + SDK) that connects global robotic arms and service robots to enable real-time human control and data collection;

· Eval Engine—a data evaluation and validation engine (CLIP + DINOv2 + optical flow semantic scoring) that generates quality scores for each operation trajectory and settles them on-chain.
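The Eval Engine's published description is limited to its inputs (CLIP, DINOv2, optical-flow semantic scoring) and its output (a per-trajectory quality score settled on-chain). The sketch below shows one simple way such signals could be combined. It assumes each component score is already normalized to [0, 1] and uses a weighted sum. The weights, the acceptance threshold, and the record format are hypothetical, not PrismaX's actual scoring rule.

```python
from dataclasses import dataclass

# Hypothetical weights and threshold; the real aggregation rule is not public here.
WEIGHTS = {"clip": 0.4, "dino": 0.3, "flow": 0.3}
ACCEPT_THRESHOLD = 0.6

@dataclass
class TrajectoryScores:
    clip: float   # assumed: task-description/footage alignment, normalized to [0, 1]
    dino: float   # assumed: visual feature consistency across frames, in [0, 1]
    flow: float   # assumed: motion plausibility from optical flow, in [0, 1]

def quality_score(s: TrajectoryScores) -> float:
    """Weighted sum of normalized component scores."""
    for name in WEIGHTS:
        v = getattr(s, name)
        if not 0.0 <= v <= 1.0:
            raise ValueError(f"{name} score must be in [0, 1], got {v}")
    return sum(w * getattr(s, name) for name, w in WEIGHTS.items())

def settlement_record(traj_id: str, s: TrajectoryScores) -> dict:
    """Record that would be submitted for on-chain settlement (hypothetical format)."""
    q = round(quality_score(s), 4)
    return {"trajectory": traj_id, "quality": q, "accepted": q >= ACCEPT_THRESHOLD}
```

A linear combination is the simplest choice. A production system might instead learn the weights from labeled trajectories or gate on each signal separately.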

Through a decentralized incentive mechanism, PrismaX turns human operating behavior into machine learning data. It builds a complete closed loop from Remote Control → Data Collection → Model Training → On-chain Settlement, creating a circular economy in which "human labor becomes a data asset."

Project Progress and Reality Assessment: PrismaX launched a test version in August 2025 in which users can remotely control a robotic arm to perform grasping experiments and generate training data. The Eval Engine is running internally. Overall, PrismaX shows a high level of technical execution and a clear focus, and it serves as a key intermediary connecting "human operation × AI model × blockchain settlement." In the long term, it could become a "decentralized labor and data protocol for the embodied intelligence era," but in the short term it still faces scalability challenges.

BitRobot Network

The BitRobot Network collects multi-source data (video, teleoperation, and simulation) through its subnets. SN/01 ET Fugi lets users remotely control robots to complete tasks, collecting navigation and perception data through interactive gameplay akin to a "real-world Pokémon Go." This gameplay produced the FrodoBots-2K dataset, one of the largest open human-robot navigation datasets, which has been used by institutions such as UC Berkeley RAIL and Google DeepMind. SN/05 SeeSaw (Virtuals Protocol) crowdsources first-person-view video at scale in real-world environments using iPhones. Other announced subnets, such as RoboCap and Rayvo, focus on capturing first-person-view video with low-cost physical devices.

Mecka

Mecka is a robot data company that employs gamified mobile data collection and custom hardware devices to crowdsource first-person view video, human motion data, and task demonstrations for building large-scale multimodal datasets to support the training of embodied intelligence models.

Sapien

Sapien is a crowdsourcing platform centered around "human motion data driving robot intelligence." It collects human body movement, posture, and interaction data through wearable devices and mobile apps for training embodied intelligence models. The project aims to build the world's largest human motion data network, making natural human behaviors a foundational data source for robot learning and generalization.

Vader

Vader crowdsources first-person-view videos with task demonstrations through its real-world MMO app EgoPlay: users record daily activities from a first-person perspective and earn $VADER rewards. Its ORN data pipeline can transform raw POV footage into privacy-preserving structured datasets, including action labels and semantic descriptions, directly usable for humanoid robot policy training.
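The step from "raw POV footage" to "privacy-preserving structured datasets with action labels and semantic descriptions" can be pictured as a record-building stage. This is a hypothetical sketch, not Vader's ORN pipeline. The record types and the `to_episode` function are assumptions, and the sketch covers only metadata handling. Contributor IDs are replaced by a salted hash, only allow-listed fields are copied, and action segments are validated and time-ordered. Visual anonymization such as face blurring is out of scope here.

```python
import hashlib
from dataclasses import dataclass, asdict

@dataclass
class ActionSegment:
    label: str        # e.g. "open_drawer"
    start_s: float
    end_s: float

@dataclass
class EpisodeRecord:
    contributor: str               # salted hash of the user id, never the raw id
    video_uri: str
    actions: list[ActionSegment]
    description: str

def to_episode(raw: dict, salt: bytes) -> EpisodeRecord:
    """Build a training record from raw upload metadata.

    Only allow-listed fields are copied, so extras such as GPS traces or
    device serials in `raw` are dropped rather than propagated.
    """
    pseudonym = hashlib.sha256(salt + raw["user_id"].encode()).hexdigest()[:16]
    segments = sorted(
        (ActionSegment(a["label"], float(a["start"]), float(a["end"])) for a in raw["annotations"]),
        key=lambda seg: seg.start_s,
    )
    for seg in segments:
        if seg.end_s <= seg.start_s:
            raise ValueError(f"empty or inverted segment: {seg}")
    return EpisodeRecord(pseudonym, raw["video_uri"], segments, raw.get("description", ""))
```

`dataclasses.asdict(record)` turns the result into plain dicts and lists, ready for serialization into a dataset shard.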

NRN Agents

NRN Agents is a gamified embodied-RL data platform that crowdsources human demonstration data through browser-based robot control and simulated competitions. Its "gamified" tasks generate long-tail behavior trajectories for imitation learning and reinforcement learning, serving as an extensible data primitive for sim-to-real policy training.

Embodied Intelligence Data Collection Layer Projects Comparison

Perception & Simulation (Middleware & Simulation)

The perception and simulation layer provides the core infrastructure that connects robots in the physical world with intelligent decision-making, including localization, communication, spatial modeling, and simulation training. It serves as the "middle layer framework" for building large-scale embodied intelligence systems. The field is still at an early exploratory stage: projects take differentiated approaches to high-precision localization, shared-space computing, protocol standardization, and distributed simulation, and there is not yet a unified standard or an interoperable ecosystem.

Middleware & Spatial Infrastructure (Middleware & Spatial Infra)

The core capabilities of robots—navigation, localization, connectivity, and spatial modeling—form the critical bridge between the physical world and intelligent decision-making. While the broader DePIN projects (Silencio, WeatherXM, DIMO) are starting to mention "robots," the following projects are most directly related to embodied intelligence.

RoboStack – Cloud-Native Robot Operating Stack

RoboStack is a cloud-native robot middleware that enables real-time scheduling, remote control, and cross-platform interoperability of robot tasks through the Robot Context Protocol (RCP). It also offers cloud-based simulation, workflow orchestration, and agent access capabilities.
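The Robot Context Protocol's message format is not described here. The sketch below therefore shows only the generic idea behind "real-time scheduling" of robot tasks: a priority queue dispatched to idle robots that have the required capability. `Task`, `Scheduler`, and the capability strings are hypothetical and do not reflect RoboStack's API.

```python
import heapq
import itertools
from dataclasses import dataclass, field

@dataclass(order=True)
class Task:
    priority: int                       # lower value = more urgent
    seq: int                            # tie-breaker keeps FIFO order within a priority
    name: str = field(compare=False)
    capability: str = field(compare=False)

class Scheduler:
    def __init__(self) -> None:
        self._queue: list[Task] = []
        self._counter = itertools.count()
        self.idle: dict[str, set[str]] = {}   # robot id -> capabilities

    def register(self, robot: str, capabilities: set[str]) -> None:
        self.idle[robot] = capabilities

    def submit(self, name: str, capability: str, priority: int) -> None:
        heapq.heappush(self._queue, Task(priority, next(self._counter), name, capability))

    def dispatch(self) -> list[tuple[str, str]]:
        """Assign queued tasks to idle robots in priority order; unmatched tasks stay queued."""
        assigned, deferred = [], []
        while self._queue:
            task = heapq.heappop(self._queue)
            robot = next((r for r, caps in list(self.idle.items()) if task.capability in caps), None)
            if robot is None:
                deferred.append(task)
                continue
            del self.idle[robot]                 # robot is busy until it re-registers
            assigned.append((task.name, robot))
        for t in deferred:
            heapq.heappush(self._queue, t)
        return assigned
```

With one arm and one mobile base registered, two urgent "pick" tasks and one "move" task yield two assignments, and the second pick task waits in the queue until the arm becomes idle again.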

GEODNET – Decentralized GNSS Network

GEODNET is a global decentralized GNSS network that provides centimeter-level RTK high-precision positioning. Through distributed base stations and on-chain incentives, it offers a real-time "geospatial layer" for drones, autonomous driving, and robotics.
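The reason base stations matter is the differential principle. A station at a precisely surveyed location sees nearly the same satellite, orbit, and atmospheric errors as a nearby rover, so its measured error can be used to correct the rover. The sketch below is a deliberate simplification that applies the correction in the position domain, in local east/north/up metres. Real RTK instead works with carrier-phase measurements and integer ambiguity resolution, which is what yields centimeter-level accuracy.

```python
from typing import NamedTuple

class ENU(NamedTuple):
    """Local east/north/up coordinates in metres."""
    e: float
    n: float
    u: float

def correction(base_known: ENU, base_measured: ENU) -> ENU:
    # Error observed at the base station, whose true position is surveyed in advance.
    return ENU(*(k - m for k, m in zip(base_known, base_measured)))

def apply(rover_measured: ENU, corr: ENU) -> ENU:
    # Over a short baseline the rover shares most of the base's errors,
    # so adding the base's correction removes them from the rover's fix.
    return ENU(*(r + c for r, c in zip(rover_measured, corr)))
```

If the base measures itself at (1.2, -0.8, 2.0) m from its surveyed origin, a rover fix of (101.2, 49.2, 2.5) is corrected to about (100.0, 50.0, 0.5).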

Auki – Posemesh for Spatial Computing

Auki has built a decentralized Posemesh spatial computing network that generates real-time 3D environmental maps through crowdsourced sensors and computing nodes, providing a shared space reference for AR, robot navigation, and multi-device collaboration. It serves as a key infrastructure connecting virtual space with real-world scenarios, driving the convergence of AR × Robotics.
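A "shared space reference" means that devices can express what they see in one common coordinate frame. The sketch below shows the underlying geometry in 2D under an assumed setup: each device already knows its pose relative to a shared anchor. A phone can then hand an object's location to a robot by transforming it into the shared frame and back out into the robot's frame. `Pose2D` and the function names are illustrative and are not part of Auki's Posemesh API.

```python
import math
from typing import NamedTuple

class Pose2D(NamedTuple):
    """Pose of a device in the shared (anchor) frame: position in metres, heading in radians."""
    x: float
    y: float
    theta: float

def to_shared(pose: Pose2D, px: float, py: float) -> tuple[float, float]:
    """Map a point from the device's local frame into the shared frame (rotate, then translate)."""
    c, s = math.cos(pose.theta), math.sin(pose.theta)
    return (pose.x + c * px - s * py, pose.y + s * px + c * py)

def from_shared(pose: Pose2D, qx: float, qy: float) -> tuple[float, float]:
    """Inverse transform: shared frame -> device's local frame."""
    dx, dy = qx - pose.x, qy - pose.y
    c, s = math.cos(pose.theta), math.sin(pose.theta)
    return (c * dx + s * dy, -s * dx + c * dy)
```

For a phone at (2, 0) facing +90°, an object 1 m straight ahead maps to (2, 1) in the shared frame. A robot sitting at the anchor with zero heading would see it at the same coordinates in its own frame.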

Tashi Network – Real-Time Robot Mesh Collaboration Network

Tashi Network is a decentralized real-time mesh network that achieves sub-30ms consensus, low-latency sensor data exchange, and multi-robot state synchronization. Its MeshNet SDK supports shared SLAM, collective coordination, and robust map updates, providing a high-performance real-time collaboration layer for embodied AI.
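To make "multi-robot state synchronization" concrete, the sketch below merges two robots' occupancy-grid replicas. It deliberately swaps in a simpler technique than consensus: a last-writer-wins merge ordered by (timestamp, robot id). This technique converges without coordination but does not provide the agreement guarantees a consensus protocol does. `CellUpdate` and `merge` are hypothetical and do not reflect the MeshNet SDK.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class CellUpdate:
    value: str          # e.g. "free" or "occupied"
    timestamp_ms: int
    robot_id: str

    def newer_than(self, other: "CellUpdate") -> bool:
        # Ties on timestamp are broken by robot id so every replica picks the same winner.
        return (self.timestamp_ms, self.robot_id) > (other.timestamp_ms, other.robot_id)

Grid = dict[tuple[int, int], CellUpdate]

def merge(local: Grid, remote: Grid) -> Grid:
    """Last-writer-wins merge of two grid replicas; commutative and idempotent."""
    merged = dict(local)
    for cell, upd in remote.items():
        if cell not in merged or upd.newer_than(merged[cell]):
            merged[cell] = upd
    return merged
```

Because the merge is commutative and idempotent, robots can exchange updates in any order and still end up with identical maps. Consensus adds value when robots must also agree on a single ordering of actions.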

Staex – Decentralized Connectivity and Telemetry Network

Staex originated in Deutsche Telekom's research division. This decentralized connectivity layer offers secure communication, trusted telemetry, and device-to-cloud routing, enabling robot fleets to exchange data reliably and collaborate across different operators.
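"Trusted telemetry" typically means that a receiver can check a message's integrity and origin and can reject replays. The sketch below assumes a pre-shared key per fleet and uses an HMAC-SHA256 tag plus a per-robot sequence counter. These choices are illustrative only and do not describe Staex's actual mechanism, which may use public-key signatures instead.

```python
import hashlib
import hmac
import json

def _canonical(payload: dict) -> bytes:
    # Stable serialization so sender and receiver MAC exactly the same bytes.
    return json.dumps(payload, sort_keys=True, separators=(",", ":")).encode()

def sign(key: bytes, payload: dict) -> dict:
    tag = hmac.new(key, _canonical(payload), hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}

class TelemetryVerifier:
    def __init__(self, key: bytes) -> None:
        self._key = key
        self._last_seq: dict[str, int] = {}

    def accept(self, msg: dict) -> bool:
        expected = hmac.new(self._key, _canonical(msg["payload"]), hashlib.sha256).hexdigest()
        if not hmac.compare_digest(expected, msg["tag"]):
            return False                       # tampered payload or wrong key
        robot, seq = msg["payload"]["robot"], msg["payload"]["seq"]
        if seq <= self._last_seq.get(robot, -1):
            return False                       # replayed or out-of-order message
        self._last_seq[robot] = seq
        return True
```

`hmac.compare_digest` is used for the comparison so that verification time does not leak how many characters of a forged tag were correct.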

Simulation and Training System (Distributed Simulation & Learning)

Gradient - Towards Open Intelligence

Gradient is an AI lab dedicated to building "Open Intelligence" by using decentralized infrastructure for distributed training, inference, verification, and simulation. Its current technology stack includes Parallax (distributed inference), Echo (distributed reinforcement learning and multi-agent training), and Gradient Cloud (enterprise-oriented AI solutions). In robotics, the Mirage platform offers distributed simulation, dynamic interactive environments, and large-scale parallel learning for embodied intelligence training, with the aim of accelerating the deployment of world models and generalist policies. Mirage is exploring potential collaboration with NVIDIA's Newton physics engine.
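"Distributed simulation" and "large-scale parallel learning" usually combine many simulator instances with randomized physics (domain randomization), so that a policy is scored across varied worlds rather than tuned to one. The sketch below is a toy illustration under stated assumptions and is not Mirage's API. The environment is a 1-D reaching task with a random friction coefficient, the "policy" is a single proportional gain, and candidates are ranked by their mean error over parallel randomized rollouts.

```python
import random
from concurrent.futures import ThreadPoolExecutor
from statistics import mean

def rollout(gain: float, seed: int, steps: int = 50) -> float:
    """Toy 1-D reaching task with a randomized friction coefficient (domain randomization).
    Returns the final distance to the target (lower is better)."""
    rng = random.Random(seed)
    friction = rng.uniform(0.5, 1.5)           # randomized physics parameter
    pos, target = 0.0, 1.0
    for _ in range(steps):
        action = gain * (target - pos)          # proportional controller as the "policy"
        pos += action / friction * 0.1
    return abs(target - pos)

def evaluate(gain: float, n_envs: int = 16, workers: int = 4) -> float:
    """Run n_envs randomized simulations in parallel and average the final error."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        errors = list(pool.map(lambda s: rollout(gain, s), range(n_envs)))
    return mean(errors)

candidates = [0.1, 0.5, 1.0, 2.0]
best = min(candidates, key=evaluate)
```

Threads keep the example self-contained, but CPython's GIL means they give no speedup for CPU-bound physics. A real system would spread rollouts across processes, GPUs, or machines.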

Robot Asset Yield Layer (RobotFi / RWAiFi)

This layer focuses on the key step of transforming robots from a "productive tool" to a "financializable asset," building the financial infrastructure of the machine economy through asset tokenization, revenue distribution, and decentralized governance. Representative projects include:

XmaquinaDAO – Physical AI DAO

XMAQUINA is a decentralized ecosystem that gives users worldwide a high-liquidity channel to participate in leading humanoid robot and embodied AI companies, bringing opportunities that were previously available only to venture capital firms on-chain. Its token, DEUS, serves as both a liquid index asset and a governance mechanism for coordinating treasury allocation and ecosystem development. Through the DAO Portal and the Machine Economy Launchpad, the community can participate on-chain via tokenized machine assets and structured participation mechanisms, collectively holding and supporting emerging Physical AI projects.
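An index-style token is usually analyzed by comparing its market price with the net asset value (NAV) of the treasury behind it. The sketch below shows that arithmetic under stated assumptions. The holdings, the marks (assumed valuations), and the function names are hypothetical, and the sketch is not a description of how DEUS is actually valued. In practice, marking illiquid private-company stakes is the hard part.

```python
from decimal import Decimal

def nav_per_token(holdings: dict[str, Decimal], marks: dict[str, Decimal], supply: Decimal) -> Decimal:
    """Net asset value per index token: marked value of treasury positions / circulating supply."""
    missing = holdings.keys() - marks.keys()
    if missing:
        raise KeyError(f"no mark for {sorted(missing)}")
    total = sum((qty * marks[asset] for asset, qty in holdings.items()), Decimal(0))
    return total / supply

def premium(market_price: Decimal, nav: Decimal) -> Decimal:
    """Positive: the token trades above the value of what the treasury holds."""
    return market_price / nav - 1
```

For instance, a treasury of 100 units of a position marked at 50 plus 5,000 in stablecoins, spread over 1,000 tokens, gives a NAV of 10 per token. A market price of 12 is then a 20% premium.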

GAIB – The Economic Layer for AI Infrastructure

GAIB is dedicated to providing a unified economic layer for tangible AI infrastructure such as GPUs and robots, connecting decentralized capital with real AI infrastructure assets to build a verifiable, composable, and revenue-generating intelligent economic system.

In robotics, GAIB does not aim to "sell robot tokens." Instead, it securitizes robot equipment and operating contracts (RaaS, data collection, teleoperation, etc.) on-chain, turning realized cash flows into on-chain, composable, income-generating assets. The system covers hardware financing (leasing / pledging), operating cash flows (RaaS / data services), and data revenue streams (licensing / contracts), making robot assets and their cash flows measurable, priceable, and tradable.
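"Priceable" in practice means discounting expected cash flows. The sketch below values a monthly robot contract by weighting each payment by the probability that the lessee is still paying, then discounting at an annual rate compounded monthly. The constant monthly default probability is a hypothetical simplification, not GAIB's risk model.

```python
def present_value(cash_flows: list[float], annual_rate: float, periods_per_year: int = 12) -> float:
    """Discount a schedule of periodic cash flows; the first flow arrives at the end of period 1."""
    r = annual_rate / periods_per_year
    return sum(cf / (1 + r) ** t for t, cf in enumerate(cash_flows, start=1))

def expected_flows(payment: float, months: int, monthly_default_prob: float) -> list[float]:
    """Weight each payment by the chance the lessee has not defaulted by then
    (hypothetical constant-hazard survival model)."""
    return [payment * (1 - monthly_default_prob) ** t for t in range(1, months + 1)]
```

For example, `present_value(expected_flows(1000.0, 24, 0.01), 0.12)` prices a two-year RaaS contract paying 1,000 per month. A higher default probability or discount rate lowers the price, which is exactly the risk-pricing step the summary below notes is still unresolved on-chain.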

GAIB uses AID / sAID as its settlement and yield mechanism and relies on structured risk management (overcollateralization, reserves, and insurance) to keep returns robust. In the long term, it plans to integrate with DeFi derivatives and liquidity markets, forming a financial loop from "robot assets" to "composable income-generating assets." Its goal is to become the economic backbone of the AI era.
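Overcollateralization and reserves can be expressed as two simple rules: an issuance check and a payment waterfall. The sketch below is illustrative only. The 120% minimum ratio, the 5% reserve target, and the waterfall order are hypothetical assumptions and not AID / sAID's actual parameters. Issuance is allowed only while collateral covers outstanding claims by the minimum ratio. Incoming cash pays holders first, any shortfall is drawn from the reserve, and surplus refills the reserve before anything is left over.

```python
from dataclasses import dataclass

@dataclass
class Pool:
    collateral_value: float   # appraised value of financed robots / contracts
    notes_outstanding: float  # on-chain claims issued against the pool
    reserve: float = 0.0

    MIN_COLLATERAL_RATIO = 1.2  # hypothetical: at least 120% backing
    RESERVE_TARGET = 0.05       # hypothetical: reserve sized at 5% of notes

    def can_issue(self, amount: float) -> bool:
        return self.collateral_value >= self.MIN_COLLATERAL_RATIO * (self.notes_outstanding + amount)

    def waterfall(self, cash_in: float, coupon_due: float) -> dict[str, float]:
        """Pay holders first, cover shortfalls from the reserve, then refill the reserve."""
        paid = min(cash_in, coupon_due)
        remaining = cash_in - paid
        draw = min(coupon_due - paid, self.reserve)
        self.reserve -= draw
        paid += draw
        target = self.RESERVE_TARGET * self.notes_outstanding
        top_up = min(remaining, max(0.0, target - self.reserve))
        self.reserve += top_up
        return {"paid_to_holders": paid, "reserve_top_up": top_up,
                "residual": remaining - top_up, "unpaid": coupon_due - paid}
```

A month with strong robot revenue builds the reserve, and a weak month draws it down, so holders see smoother payouts until the reserve is exhausted. At that point the "unpaid" amount exposes the shortfall.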

Web3 Robotics Ecosystem Map:

5. Summary and Outlook: Realistic Challenges and Long-Term Opportunities

From a long-term perspective, the integration of Robotics × AI × Web3 aims to build a decentralized machine economy (DeRobot Economy), driving embodied intelligence from "standalone automation" towards "empowerment, settlement, and governance" of networked collaboration. The core logic is to establish a self-reinforcing mechanism through "Token → Deployment → Data → Value Redistribution", enabling robots, sensors, and compute nodes to achieve ownership, transaction, and revenue sharing.

However, in the current stage, this model is still in its early exploration phase, far from forming a stable cash flow and scalable business loop. Most projects remain at the narrative level with limited actual deployment. Robot manufacturing and operation are capital-intensive industries, and infrastructure expansion cannot rely solely on token incentives. While on-chain financial design is composable, it has yet to address the risk pricing and return realization of real-world assets. Therefore, the so-called "machine network self-reinforcement" remains idealistic, and its business model awaits practical validation.

· Model & Intelligence Layer is currently the direction with the greatest long-term value potential. Represented by OpenMind's open-source robot operating system, it attempts to break open closed ecosystems, unify multi-robot collaboration, and provide a language-to-action interface. Its technical vision is clear and its system design comprehensive, but the engineering effort is immense and the validation cycle long, and it has not yet formed an industry-level positive feedback loop.

· Machine Economy Layer is still at an early stage: few robots are actually deployed, and DID identity and incentive networks have yet to form a coherent cycle, so the "machine labor economy" remains far off. Only once embodied intelligence is widely deployed will the economic effects of on-chain identity, settlement, and collaboration networks truly emerge.

· Data Layer has a relatively low barrier to entry and is currently the most commercially viable direction. Embodied intelligence data requires high spatiotemporal continuity and accurate action semantics, which determine its quality and reusability. Balancing "crowdsourcing scale" against "data reliability" is the core challenge for the industry. PrismaX starts from B2B demand and distributes collection and validation tasks accordingly, which to some extent offers a replicable template, but ecosystem scale and data trading will still take time to build.

· Middleware & Simulation Layer is still in the technical validation phase, lacking unified standards and interfaces to form an interoperable ecosystem. The migration of simulation results to real environments is challenging to standardize, limiting Sim2Real efficiency.

· Asset Revenue Layer (RobotFi / RWAiFi): Web3 mainly plays a supporting role in supply chain finance, equipment leasing, and investment governance, improving transparency and settlement efficiency rather than reshaping the industry's underlying logic.

That said, we believe that the intersection of Robotics × AI × Web3 still represents the origin of the next-generation intelligent economic system. It is not only a fusion of technological paradigms but also an opportunity to restructure production relations: when machines have identity, incentives, and governance mechanisms, human-machine collaboration will move from local automation to networked autonomy. In the short term, this direction is still primarily focused on narrative and experimentation, but the institutional and incentive framework it establishes is laying the foundation for the future economic order of a machine society. From a long-term perspective, the integration of embodied intelligence and Web3 will reshape the boundaries of value creation, making intelligent entities true economic actors that can hold rights, collaborate, and generate revenue.

This article is a contributed submission and does not represent the views of BlockBeats.

Disclaimer: The content of this article solely reflects the author's opinion and does not represent the platform in any capacity. This article is not intended to serve as a reference for making investment decisions.
