Understanding Vitalik's Long Essay: Why Should Smart People Stick to "Dumb Rules"?

Those "galaxy brain" theories that seem to explain everything are often the most dangerous all-purpose excuses. By contrast, rules that sound rigid and dogmatic, the "high-resistance" rules, are actually our last line of defense against self-deception.

Written by: Zhixiong Pan

A few weeks ago, Vitalik published an article titled "Galaxy Brain Resistance". It is fairly obscure and hard to follow, and I haven't seen a good interpretation of it, so I'll give it a try.

After all, I saw that Karpathy, who coined the term "vibe coding," also read this article and took notes, so there must be something special about it.

First, let's talk about the title—what do Galaxy Brain and Resistance mean? Once you understand the title, you'll basically get what the article is about.

1️⃣ "Galaxy Brain" translates into Chinese as "银河大脑" (literally "galaxy brain"), but the term actually comes from an internet meme, usually shown as an image combining a galaxy and a brain (🌌🧠), which you've probably seen before.

At first, it was of course a compliment, used to praise someone's brilliant ideas—in other words, smart. But as its usage became widespread, it gradually turned into a kind of irony, roughly meaning "overthinking it, logic has gone too far."

Here, when Vitalik mentions 🌌🧠, he specifically refers to the behavior of "using high intelligence for mental gymnastics, forcing unreasonable things to sound like they make perfect sense." For example:

- Laying off a large number of people purely to save money, but insisting it's about "delivering high-quality talent to society."

- Issuing worthless tokens to fleece retail investors, but claiming it's about "empowering the global economy through decentralized governance."

All of these can be considered "galaxy brain" thinking.

2️⃣ So what does Resistance mean? This concept is easy to get confused about. In popular terms, it can be likened to "the ability to resist being led astray" or "the ability to resist being fooled."

So Galaxy Brain Resistance should mean Resistance to [becoming] Galaxy Brain, that is, "the ability to resist (turning into) galaxy brain (nonsense)."

Or more precisely, it describes how easy or difficult it is for a certain way of thinking or argumentation style to be abused to "prove whatever conclusion you want."

So this "resistance" can be a property of a given "theory." For example:

- Low-resistance theories: With just a little stretching, they can be twisted into extremely far-fetched "galaxy brain" logic.

- High-resistance theories: No matter how hard you push on them, they stay what they are and are hard to twist into absurd logic.

For example, Vitalik says that in his ideal society, the law should have a red line: only when you can clearly explain how a certain behavior causes harm or risk to a specific victim can it be prohibited. This standard has strong Galaxy Brain resistance because it doesn't accept infinitely stretchable or vague reasons like "I subjectively dislike it" or "it's immoral."

3️⃣ Vitalik also gives many examples in the article, even using theories we often hear, such as "longtermism" and "inevitabilism."

"Longtermism" is very susceptible to 🌌🧠-style thinking because its resistance is extremely low—it's basically a "blank check." The "future" is too distant and too vague.

- High-resistance statement: "This tree will grow 5 meters taller in 10 years." This is verifiable and not easy to make up.

- Low-resistance "longtermism": "Although I'm about to do something extremely immoral (like eliminating a group of people or starting a war), it's for the sake of humanity living in a utopia 500 years from now. According to my calculations, the total happiness in the future is infinite, so today's sacrifices are negligible."

See, as long as you stretch the timeline long enough, you can justify any evil act in the present. As Vitalik says: "If your argument can justify anything, then your argument justifies nothing."

However, Vitalik also acknowledges that "the long term is important." What he criticizes is "using excessively vague, unverifiable future benefits to cover up clear present harms."

Another major issue is "inevitabilism."

This is also the favorite self-defense tactic in Silicon Valley and the tech world.

The rhetoric goes like this: "AI replacing human jobs is historically inevitable. Even if I don't do it, someone else will. So my aggressive development of AI is not for profit, but to follow the trend of history."

Why is its resistance so low? Because it perfectly dissolves personal responsibility: since the outcome is "inevitable," I don't need to be responsible for the destruction I cause.

This is also a typical galaxy brain move: wrapping up personal desires for profit or power as "fulfilling a historical mission."

4️⃣ So what should we do in the face of these "traps for smart people"?

The antidote Vitalik offers is surprisingly simple, even a bit "dumb." He believes that the smarter you are, the more you need high-resistance rules to restrain yourself and prevent your mental gymnastics from going off the rails.

First, stick to "deontological ethics," that is, kindergarten-level moral iron laws.

Don't bother with complicated math about "the future of all humanity"—return to the most rigid principles:

- Don't steal

- Don't kill innocent people

- Don't commit fraud

- Respect others' freedom

These rules have extremely high resistance because they are black and white and non-negotiable. When you try to use "longtermism" to justify misappropriating user funds, the rigid rule of "don't steal" will slap you in the face: stealing is stealing, so don't make excuses about a great financial revolution.

Second, hold the right "position," even including your physical location.

As the saying goes, where you sit determines how you think. If you hang out every day in the echo chamber of the San Francisco Bay Area, surrounded by AI accelerationists, it's hard to stay clear-headed. Vitalik even gives a high-resistance suggestion at the physical level: don't live in the San Francisco Bay Area.

5️⃣ Summary

Vitalik's article is actually a warning to those extremely smart elites: don't think that just because you're highly intelligent, you can bypass basic moral boundaries.

Those "galaxy brain" theories that seem to explain everything are often the most dangerous universal excuses. In contrast, those rules that sound rigid and dogmatic—"high resistance" rules—are actually our last line of defense against self-deception.

You may also like

Iran’s Covert Cryptocurrency Transfers Bypass Sanctions

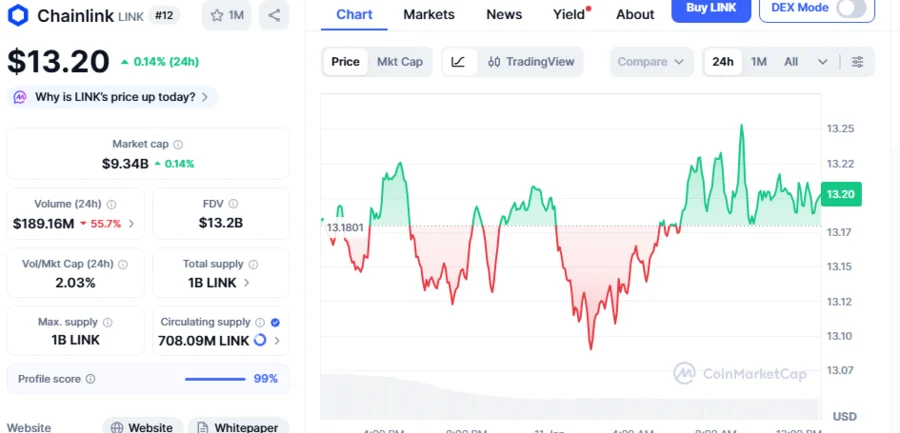

Is Chainlink Heading To $18? Analyst Points Out A Range-Bound Pattern Suggesting 36.3% LINK Rally Amid Growing Buying Pressure

Dan Ives: Massive AI Investments Mark Only the Beginning of the ‘Fourth Industrial Revolution’

Borderlands Mexico: Flexport cautions importers that tariff concerns will remain prominent in 2026